Status as a Service (StaaS)

Editor's Note 1: I have no editor.

Editor’s Note 2: I would like to assure new subscribers to this blog that most of my posts are not as long as this one. Or as long as my previous one. My long break from posting here means that this piece is a collection of what would’ve normally been a series of shorter posts. I put section titles below, so skip any that don’t interest you. My short takes are on Twitter. All that said, I apologize for nothing.

Editor's Note 3: I lied, I apologize for one thing, and that is my long writing hiatus. Without a work computer, I had to resort to using my 7 year old 13" Macbook Pro as my main computer, and sometime last year my carpal tunnel syndrome returned with a vengeance and left my wrists debilitated with pain. I believe all of you who say your main computer is a laptop or, shudder, an iPad, but goodness gracious I cannot type on a compact keyboard for long periods of time without having my hands turn into useless stumps. It was only the return to typing almost exclusively on my old friend the Kinesis Advantage 2 ergo keyboard that put me back in the game.

Editor’s Note 4: I was recently on Patrick O'Shaughnessy's podcast Invest Like the Best, and near the end of that discussion, I mentioned a new essay I'd been working on about the similarities between social networks and ICO's. This is that piece.

Status-Seeking Monkeys

"It is a truth universally acknowledged, that a person in possession of little fortune, must be in want of more social capital."

So wrote Jane Austen, or she would have, I think, if she were chronicling our current age (instead we have Taylor Lorenz, and thank goodness for that).

Let's begin with two principles:

People are status-seeking monkeys*

People seek out the most efficient path to maximizing social capital

* Status-Seeking Monkeys will also be the name of my indie band, if I ever learn to play the guitar and start a band

I begin with these two observations of human nature because few would dispute them, yet I seldom see social networks, some of the largest and fastest-growing companies in the history of the world, analyzed on the dimension of status or social capital.

It’s in part a measurement issue. Numbers lend an air of legitimacy and credibility. We have longstanding ways to denominate and measure financial capital and its flows. Entire websites, sections of newspapers, and a ton of institutions report with precision on the prices and movements of money.

We have no such methods for measuring the values and movement of social capital, at least not with anywhere near the accuracy or precision. The body of research feels both broad and yet meager. If we had better measures besides user counts, this piece and many others would be full of charts and graphs that added a sense of intellectual heft to the analysis. There would be some annual presentation called the State of Social akin to Meeker's Internet Trends Report, or perhaps it would be a fifty page sub-section of her annual report.

Despite this, most of the social media networks we study generate much more social capital than actual financial capital, especially in their early stages; almost all such companies have internalized one of the popular truisms of Silicon Valley, that in the early days, companies should postpone revenue generation in favor of rapid network scaling. Social capital has much to say about why social networks lose heat, stall out, and sometimes disappear altogether. And, while we may not be able to quantify social capital, as highly attuned social creatures, we can feel it.

Social capital is, in many ways, a leading indicator of financial capital, and so its nature bears greater scrutiny. Not only is it good investment or business practice, but analyzing social capital dynamics can help to explain all sorts of online behavior that would otherwise seem irrational.

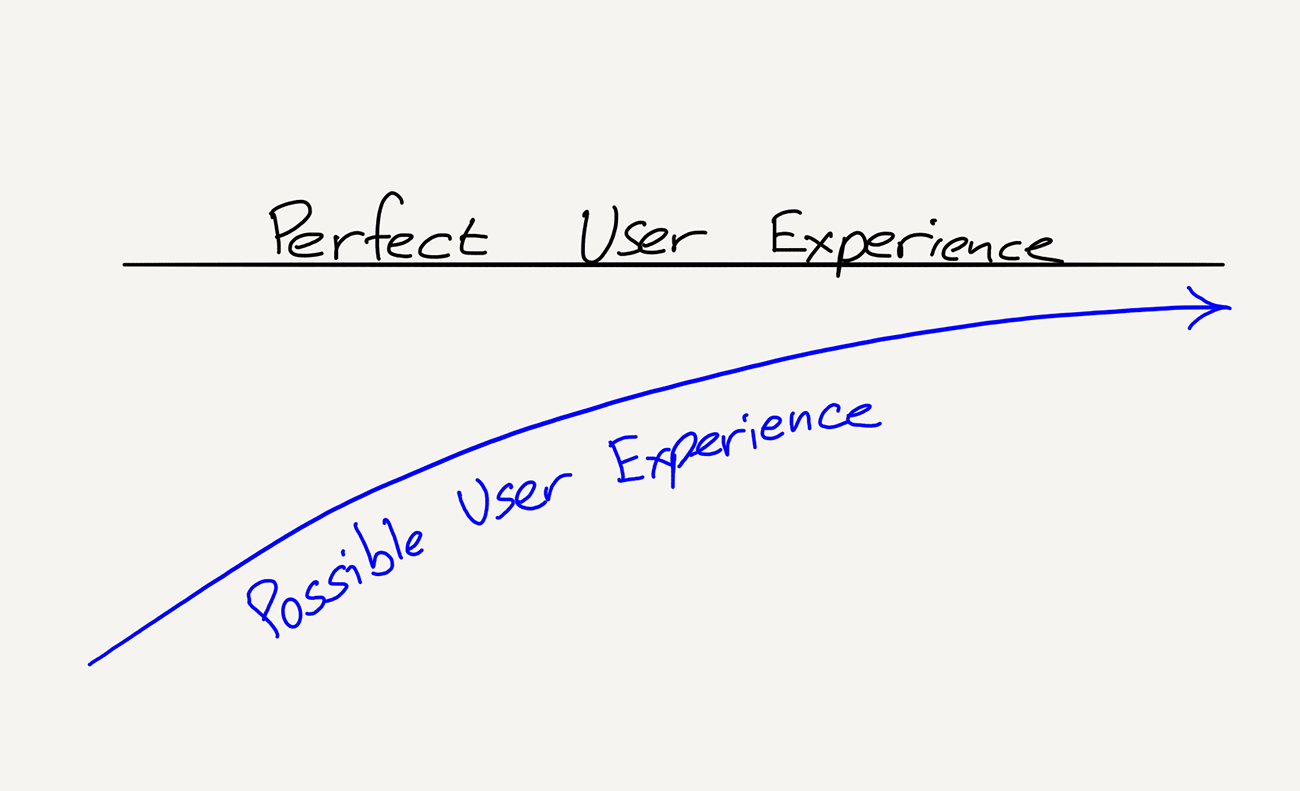

In the past few years, much progress has been made analyzing Software as a Service (SaaS) businesses. Not as much has been made on social networks. Analysis of social networks still strikes me as being like economic growth theory long before Paul Romer's paper on endogenous technological change. However, we can start to demystify social networks if we also think of them as SaaS businesses, but instead of software, they provide status. This post is a deep dive into what I refer to as Status as a Service (StaaS) businesses.

Think of this essay as a series of strongly held hypotheses; without access to the types of data which I’m not even sure exist, it’s difficult to be definitive. As ever, my wise readers will add or push back as they always do.

Traditional Network Effects Model of Social Networks

One of the fundamental lessons of successful social networks is that they must first appeal to people when they have few users. Typically this is done through some form of single-user utility.

This is the classic cold start problem of social. The traditional chicken-and-egg question is actually answerable: what comes first is a single chicken, and then another chicken, and then another chicken, and so on. The harder version of the question is why the first chicken came and stayed when no other chickens were around, and why the others followed.

The second fundamental lesson is that social networks must have strong network effects so that as more and more users come aboard, the network enters a positive flywheel of growth, a compounding value from positive network effects that leads to hockey stick growth that puts dollar signs in the eyes of investors and employees alike. "Come for the tool, stay for the network" wrote Chris Dixon, in perhaps the most memorable maxim for how this works.

Even before social networks, we had Metcalfe's Law on telecommunications networks:

The value of a telecommunications network is proportional to the square of the number of connected users of the system (n^2)

This ported over to social networks cleanly. It is intuitive, and it includes that tantalizing math formula that explains why growth curves for social networks bend up sharply at the ankle of the classic growth S-curve.
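To make the arithmetic concrete, here is a minimal Python sketch of Metcalfe's Law. The proportionality constant `k` and the function names are my own illustrative assumptions; the point is only that under an n^2 rule each additional user adds more value than the one before.

```python
def metcalfe_value(n: int, k: float = 1.0) -> float:
    """Network value under Metcalfe's Law: proportional to n squared.

    k is a hypothetical proportionality constant, not a measured quantity.
    """
    return k * n ** 2


def marginal_value(n: int, k: float = 1.0) -> float:
    """Value added by the n-th user: k * (n^2 - (n-1)^2) = k * (2n - 1)."""
    return metcalfe_value(n, k) - metcalfe_value(n - 1, k)


if __name__ == "__main__":
    for users in (10, 100, 1000):
        print(users, metcalfe_value(users), marginal_value(users))
```

Doubling the user count quadruples the modeled value, which is the sharp upward bend described above. The questions that follow are about everything this tidy formula leaves out.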

But dig deeper and many many questions remain. Why do some large social networks suddenly fade away, or lose out to new tiny networks? Why do some new social networks with great single-player tools fail to transform into networks, while others with seemingly frivolous purposes make the leap? Why do some networks sometimes lose value when they add more users? What determines why different networks stall out at different user base sizes? Why do some networks cross international borders easily while others stay locked within specific countries? Why, if Metcalfe's Law holds, do many of Facebook's clones of other social network features fail, while some succeed, like Instagram Stories?

What ties many of these explanations together is social capital theory, and how we analyze social networks should include a study of a social network's accumulation of social capital assets and the nature and structure of its status games. In other words, how do such companies capitalize, either consciously or not, on the fact that people are status-seeking monkeys, always trying to seek more of it in the most efficient way possible?

To paraphrase Nicki Minaj, “If I'm fake I ain't notice cause my followers ain't.”

[Editor’s note: sometimes the followers actually are fake.]

Utility vs. Social Capital Framework

Classic network effects theory still holds, I’m not discarding it. Instead, let's append some social capital theory. Together, those form the two axes on which I like to analyze social network health.

Actually, I tend to use three axes to dissect social networks.

The three axes on which I evaluate social network strength

For this post, though, I'm only going to look at two of them, utility and social capital, as the entertainment axis adds a whole lot of complexity which I'll perhaps explain another time.

The basic two axis framework guiding much of the social network analysis in this piece

Utility doesn't require much explanation, though we often use the term very loosely and categorize too many things as utility when they aren't that useful (we generally confuse circuses for bread and not the reverse; Fox News, for example, is more entertainment than utility, as is common of many news outlets). A social network like Facebook allows me to reach lots of people I would otherwise have a harder time tracking down, and that is useful. A messaging app like WhatsApp allows me to communicate with people all over the world without paying texting or incremental data fees, which is useful. Quora and Reddit and Discord and most every social network offer some forms of utility.

The other axis is, for lack of a more precise term, the social capital axis, or the status axis. Can I use the social network to accumulate social capital? What forms? How is it measured? And how do I earn that status?

There are several different paths to success for social networks, but those which compete on the social capital axis are often more mysterious than pure utilities. Competition on raw utility tends to be Darwinian, ruthless, and highly legible. This is the world, for example, of communication services like messaging and video conferencing. Investing in this space also tends to be a bit more straightforward: how useful is your app or service, can you get distribution, etc. When investors send me decks on things in this category, I am happy to offer an opinion, but I enjoy puzzling over the world of artificial prestige even more.

The creation of a successful status game is so mysterious that it often smacks of alchemy. For that reason, entrepreneurs who succeed in this space are thought of as a sort of shaman, perhaps because most investors are middle-aged white men who are already so high status they haven't the first idea why people would seek virtual status (more on that later).

With the rise of Instagram, with its focus on photos and filters, and Snapchat, with its ephemeral messaging, and Vine, with its 6-second video limit, for a while there was a thought that new social networks would be built on some new modality of communications. That's a piece of it, but it's not the complete picture, and not for the reasons many people think, which is why we have seen a whole bunch of strange failed experiments in just about every odd combination of features and filters and artificial constraints in how we communicate with each other through our phones. Remember Facebook's Snapchat competitor Slingshot, in which you had to unlock any messages you received by responding with a message? It felt like product design by mad libs.

When modeling how successful social networks create a status game worth playing, a useful metaphor is one of the trendiest technologies: cryptocurrency.

Social Networks as ICO's

How is a new social network analogous to an ICO?

Each new social network issues a new form of social capital, a token.

You must show proof of work to earn the token.

Over time it becomes harder and harder to mine new tokens on each social network, creating built-in scarcity.

Many people, especially older folks, scoff at both social networks and cryptocurrencies.

["Why does anyone care what you ate for lunch?" is the canonical retort about any social network, though it’s fading with time. Both social networks and ICO's tend to drive skeptics crazy because they seem to manufacture value out of nothing. The shifting nature of scarcity will always leave a wake of skepticism and disbelief.]

Years ago, I stayed at the house of a friend whose high school daughter was home upstairs with a classmate. As we adults drank wine in the kitchen downstairs while waiting for dinner to finish in the oven, we heard lots of music and stomping and giggling coming from upstairs.

When we finally called them down for dinner, I asked them what all the ruckus had been. My friend's daughter proudly held up her phone to show me a recording they'd posted to an app called Musical.ly. It was a lip synch and dance routine replete with their own choreography. They'd rehearsed the piece more times than they could count. It showed. Their faces were shiny with sweat, and they were still breathing hard from the exertion. Proof of work indeed.

I spent the rest of the dinner scrolling through the app, fascinated, interviewing the girls about what they liked about the app, why they were on it, what share of their free time it had captured. I can't tell if parents are offended or glad when I spend much of the time visiting them interviewing their sons and daughters instead, but in the absence of good enough metrics with which to analyze this space, I subscribe to the Jane Goodall theory of how to study your subject. Besides, status games of adults are already well covered by the existing media, from literature to film. Children's status games, once familiar to us, begin to fade from our memory as time passes, and its modern forms have been drastically altered by social media.

Other examples abound. Perhaps you've read a long and thoughtful response by a random person on Quora or Reddit, or watched YouTube vloggers publishing night after night, or heard about popular Vine stars living in houses together, helping each other shoot and edit 6-second videos. While you can outsource Bitcoin mining to a computer, people still mine for social capital on social networks largely through their own blood, sweat, and tears.

[Aside: if you yourself are not an aspiring social network star, living with one is...not recommended.]

Perhaps, if you've spent time around today's youth, you've watched with a mixture of horror and fascination as a teen snaps dozens of selfies before publishing the most flattering one to Instagram, only to pull it down if it doesn't accumulate enough likes within the first hour. It’s another example of proof of work, or at least vigorous market research.

Almost every social network of note had an early signature proof of work hurdle. For Facebook it was posting some witty text-based status update. For Instagram, it was posting an interesting square photo. For Vine, an entertaining 6-second video. For Twitter, it was writing an amusing bit of text of 140 characters or fewer. Pinterest? Pinning a compelling photo. You can likely derive the proof of work for other networks like Quora and Reddit and Twitch and so on. Successful social networks don't pose trick questions at the start, it’s usually clear what they want from you.

[An aside about exogenous social capital: you might complain that your tweets are more interesting and grammatical than those of, say, Donald Trump (you're probably right!). Or that your photos are better composed and more interesting at a deep level of photographic craft than those of Kim Kardashian. The difference is, they bring a massive supply of exogenous pre-existing social capital from another status game, the fame game, to every table, and some forms of social capital transfer quite well across platforms. Generalized fame is one of them. More specific forms of fame or talent might not retain their value as easily: you might follow Paul Krugman on Twitter, for example, but not have any interest in his Instagram account. I don't know if he has one, but I probably wouldn't follow it if he did, sorry Paul, it’s nothing personal.]

If you've ever joined one of these social networks early enough, you know that, on a relative basis, getting ahead of others in terms of social capital (followers, likes, etc.) is easier in the early days. Some people who were featured on recommended follower lists in the early days of Twitter have follower counts in the 7-figures, just as early masters of Musical.ly and Vine accumulated massive and compounding follower counts. The more people who follow you, the more followers you gain because of leaderboards and recommended follower algorithms and other such common discovery mechanisms.

It's true that as more people join a network, more social capital is up for grabs in the aggregate. However, in general, if you come to a social network later, unless you bring incredible exogenous social capital (Taylor Swift can join any social network on the planet and collect a massive following immediately), the competition for attention is going to be more intense than it was in the beginning. Everyone has more of an understanding of how the game works so the competition is stiffer.

Why Proof of Work Matters

Why does proof of work matter for a social network? If people want to maximize social capital, why not make that as easy as possible?

As with cryptocurrency, if it were so easy, it wouldn't be worth anything. Value is tied to scarcity, and scarcity on social networks derives from proof of work. Status isn't worth much if there's no skill and effort required to mine it. It's not that a social network that makes it easy for lots of users to perform well can't be a useful one, but competition for relative status still motivates humans. Recall our first tenet: humans are status-seeking monkeys. Status is a relative ladder. By definition, if everyone can achieve a certain type of status, it’s no status at all, it’s a participation trophy.

Musical.ly created a hurdle for gaining followers and status that wasn't easily cleared by many people. However, for some, especially teens, and especially girls, it was a status game at which they were particularly suited to win. And so they flocked there, because, according to my second tenet, people look for the most efficient ways to accumulate the most social capital.

Recall Twitter in the early days, when it was a harmless but somewhat inert status update service. I went back to look at my first few tweets on the service from some 12 years ago and my first two, spaced about a year apart, were both about doing my taxes. Looking back at them, I bore even myself. Early Twitter consisted mostly of harmless but dull life status updates, a lot of “is this thing on?” tapping on the virtual microphone. I guess I am in the camp of not caring about what you had for lunch after all. Get off my lawn, err, phone screen!

What changed Twitter, for me, was the launch of Favstar and Favrd (both now defunct, ruthlessly murdered by Twitter), these global leaderboards that suddenly turned the service into a competition to compose the most globally popular tweets. Recall, the Twitter graph was not as dense then as it is now, nor did distribution accelerants like one-click retweeting and Moments exist yet.

What Favstar and Favrd did was surface really great tweets and rank them on a scoreboard, and that, to me, launched the performative revolution in Twitter. It added needed feedback to the feedback loop, birthing a new type of comedian, the master of the 140 character or less punchline (the internet has killed the joke, humor is all punchline now that the setup of the joke is assumed to be common knowledge thanks to Google).

The launch of these global tweet scoreboards reminds me of the moment in the now classic film** Battle Royale when Beat Takeshi Kitano informs a bunch of troublemaking school kids that they’ve been deported to an island and are to fight to the death, last student standing wins, and that those who try to sneak out of designated battle zones will be killed by explosive collars. I'm not saying that Twitter is a life-or-death struggle, but you need only time travel back to pre-product-market-fit Twitter to see the vast difference in tone.

**Now classic because Battle Royale has subsequently been ripped off, err, paid tribute to by The Hunger Games, Fortnite, Maze Runner, and just about every YA franchise out there because who understands barbarous status games better than teenagers?

Favstar.fm screenshot. Just seeing some of those old but familiar avatars makes me sentimental, perhaps like how early Burning Man devotees think back on its early years, before the moneyed class came in and ruined that utopia of drugs, nudity, and art.

Chasing down old Favrd screenshots, I still laugh at the tweets surfaced.

One more Favrd screenshot just for old time’s sake

It's critical that not everyone can quip with such skill. This gave Twitter its own proof of work, and over time the overall quality of tweets improved as that feedback loop spun and tightened. The strategies that gained the most likes were fed in increasing volume into people's timelines as everyone learned from and competed with each other.

Read Twitter today and hardly any of the tweets are the mundane life updates of its awkward pre-puberty years. We are now in late-stage performative Twitter, where nearly every tweet is hungry as hell for favorites and retweets, and everyone is a trained pundit or comedian. It's hot takes and cool proverbs all the way down. The harmless status update Twitter was a less thirsty scene but also not much of a business. Still, sometimes I miss the halcyon days when not every tweet was a thirst trap. I hate the new Kanye, the bad mood Kanye, the always rude Kanye, spaz in the news Kanye, I miss the sweet Kanye, chop up the beats Kanye.

Thirst for status is potential energy. It is the lifeblood of a Status as a Service business. To succeed at carving out unique space in the market, social networks offer their own unique form of status token, earned through some distinctive proof of work.

Conversely, let's look at something like Prisma, a photo filter app which tried to pivot to become a social network. Prisma surged in popularity upon launch by making it trivial to turn one of your photos into a fine art painting with one of its many neural-network-powered filters.

It worked well. Too well.

Since almost any photo could, with one-click, be turned into a gorgeous painting, no single photo really stands out. The star is the filter, not the user, and so it didn't really make sense to follow any one person over any other person. Without that element of skill, no framework for a status game or skill-based network existed. It was a utility that failed at becoming a Status as a Service business.

In contrast, while Instagram's filters, in its earliest days, improved upon the somewhat limited quality of smartphone photos at the time, the quality of those photos still depended for the most part on the photographer. The composition, the selection of subject matter, these still derived from the photographer’s craft, and no filter could elevate a poor photo into a masterpiece.

So, to answer an earlier question about how a new social network takes hold, let’s add this: a new Status as a Service business must devise some proof of work that depends on some actual skill to differentiate among users. If it does, then it creates, like an ICO, some new form of social capital currency of value to those users.

This is not the only way a social network can achieve success. As noted before, you can build a network based around utility or entertainment. However, the addition of status helps us to explain why some networks which seemingly offer little in the way of meaningful utility (is a service that forces you to make only a six second video useful?) still achieve traction.

Facebook's Original Proof of Work

You might wonder, how did Facebook differentiate itself from MySpace? It started out as mostly a bunch of text status updates, nothing necessarily that innovative.

In fact, Facebook launched with one of the most famous proof of work hurdles in the world: you had to be a student at Harvard. By requiring a harvard.edu email address, Facebook drafted off of one of the most elite cultural filters in the world. It's hard to think of many more powerful slingshots of elitism.

By rolling out, first to Ivy League schools, then to colleges in general, Facebook scaled while maintaining a narrow age dispersion and exclusivity based around educational credentials.

Layer that on top of the broader social status game of stalking attractive members of the other sex that animates much of college life and Facebook was a service that tapped into reserves of some of the most heated social capital competitions in the world.

Social Capital ROI

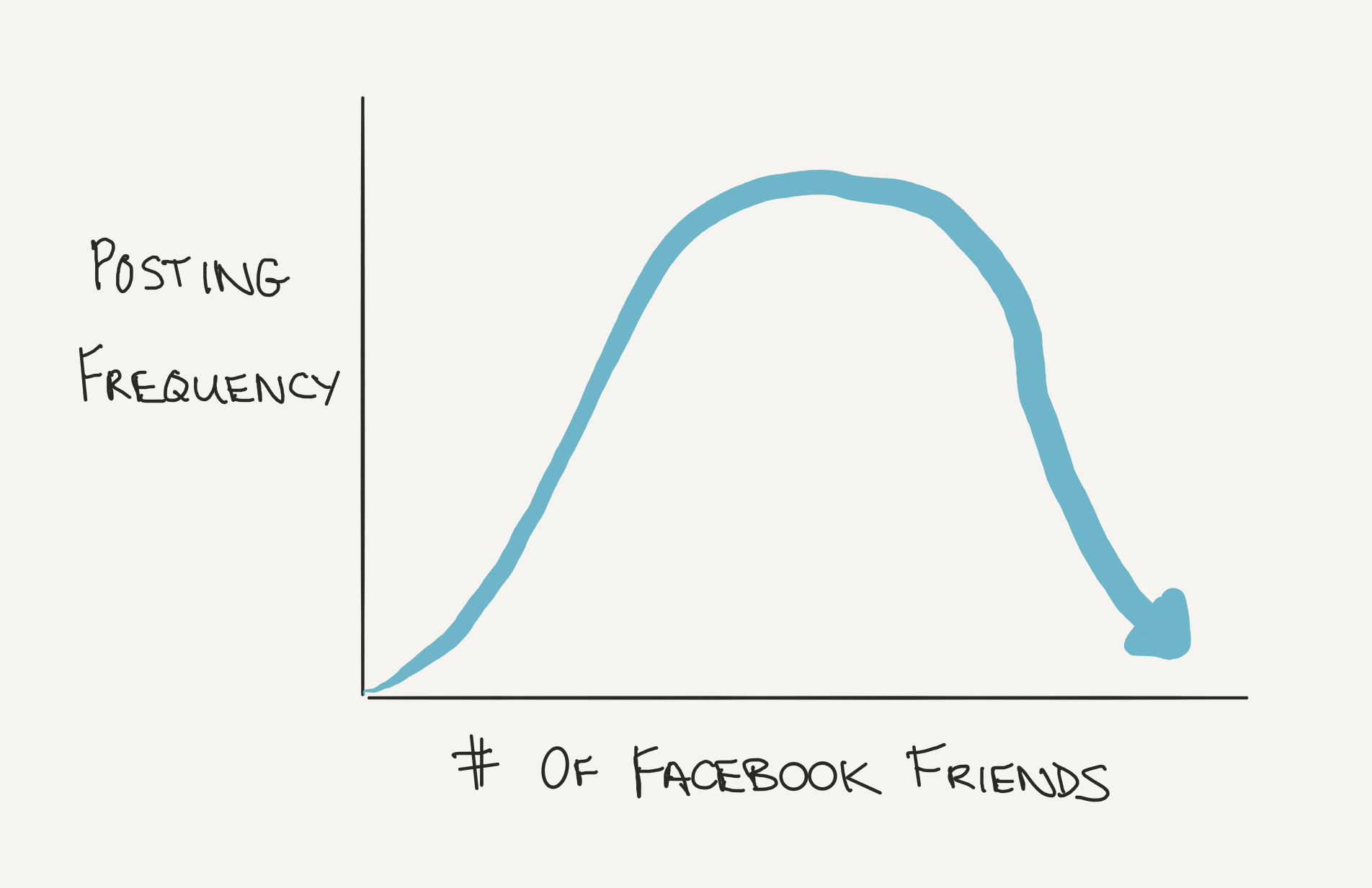

If a person posts something interesting to a platform, how quickly do they gain likes and comments and reactions and followers? The second tenet is that people seek out the most efficient path to maximize their social capital. To do so, they must have a sense for how different strategies vary in effectiveness. Most humans seem to excel at this.

Young people, with their much higher usage rate on social media, are the most sensitive and attuned demographic to the payback period and ROI on their social media labor. So, for example, young people tend not to like Twitter but do enjoy Instagram.

It's not that Twitter doesn't dole out the occasional viral supernova; every so often someone composes a tweet that goes over 1K and then 10K likes or retweets (Twitter should allow people to buy a framed print of said tweet with a silver or gold 1K club or 10K club designation to supplement its monetization). But it’s not common, and most tweets are barely seen by anyone at all. Pair that with young people's bias towards and skill advantage in visual mediums over textual ones and it's not surprising Instagram is their social battleground of preference (video games might be the most lucrative battleground for the young if you broaden your definition of social networks, and that's entirely reasonable, though that arena skews male).

Instagram, despite not having any official reshare option, allows near unlimited hashtag spamming, and that allows for more deterministic, self-generated distribution. Twitter also isn't as great for spreading visual memes because of its stubborn attachment to cropping photos to maintain a certain level of tweet density per phone screen.

The gradient of your network's social capital ROI can often govern your market share among different demographics. Young girls flocked to Musical.ly in its early days because they were uniquely good at the lip synch dance routine videos that were its bread and butter. In this age of neverending notifications, heavy social media users are hyper aware of differing status ROI among the apps they use.

I can still remember posting the same photos to Flickr and Instagram for a while and seeing how quickly the latter passed the former in feedback. If I were an investor or even an employee, I might have something like a representative basket of content that I'd post from various test accounts on different social media networks just to track social capital interest rates and liquidity among the various services.

Some features can increase the reach of content on any network. A reshare option like the retweet button is a massive accelerant of virality on apps where the social graph determines what makes it into the feed. In an effort to increase engagement, Twitter has, over the years, become more and more aggressive about increasing the liquidity of tweets. It now displays tweets that were liked by people you follow, even if they didn't retweet them, and it has populated its search tab with Moments, which, like Instagram's Explore tab, guesses at other content you might like and provides an endless scroll filled with it.

TikTok is an interesting new player in social media because its default feed, For You, relies on a machine learning algorithm to determine what each user sees; the feed of content from creators you follow, in contrast, is hidden one pane over. If you are new to TikTok and have just uploaded a great video, the selection algorithm promises to distribute your post much more quickly than if you were sharing it on a network that relies on the size of your following, which most people have to build up over a long period of time. Conversely, if you come up with one great video but the rest of your work is mediocre, you can't count on continued distribution on TikTok since your followers live mostly in a feed driven by the TikTok algorithm, not their follow graph.

The result is a feedback loop that is much more tightly wound than that of other social networks, both in the positive and negative direction. Theoretically, if the algorithm is accurate, the content in your feed should correlate most closely to the quality of the work and its alignment with your personal interests rather than drawing from the work of accounts you follow. At a time when Bytedance is spending tens (hundreds?) of millions of marketing dollars in a bid to acquire users in international markets, the rapid ROI on new creators' work is a helpful quality in ensuring they stick around.

This development is interesting for another reason: graph-based social capital allocation mechanisms can suffer from runaway winner-take-all effects. In essence, some networks reward those who gain a lot of followers early on with so much added exposure that they continue to gain more followers than other users, regardless of whether they've earned it through the quality of their posts. One hypothesis on why social networks tend to lose heat at scale is that this type of old money can't be cleared out, and new money loses the incentive to play the game.
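A toy model makes the runaway effect easier to see. The Python sketch below is an assumption of mine, not a description of any real network's algorithm: each round a fixed pool of new follows is split among existing accounts in proportion to the followers they already have, and every account is assumed to post work of identical quality. The function name and parameters are hypothetical.

```python
def simulate_followers(join_rounds, total_rounds, new_follows_per_round=100.0):
    """Deterministic rich-get-richer model (illustrative assumption only).

    join_rounds[i] is the round in which account i joins the network.
    Each round, new_follows_per_round follows are split among accounts
    that have already joined, in proportion to (current followers + 1).
    """
    followers = [0.0] * len(join_rounds)
    for current_round in range(total_rounds):
        active = [i for i, joined in enumerate(join_rounds) if joined <= current_round]
        if not active:
            continue
        total_weight = sum(followers[i] + 1.0 for i in active)
        # Compute every share first so the split uses start-of-round counts.
        shares = {
            i: new_follows_per_round * (followers[i] + 1.0) / total_weight
            for i in active
        }
        for i, share in shares.items():
            followers[i] += share
    return followers


if __name__ == "__main__":
    early, late = simulate_followers(join_rounds=[0, 5], total_rounds=20)
    print(f"early joiner: {early:.0f} followers, late joiner: {late:.0f} followers")
```

In this model the late joiner never closes the gap, even with equally good posts, because the ratio between the two accounts' weights is frozen at the moment the second account arrives. That is the old money problem in miniature.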

One of the striking things about Silicon Valley as a region versus East Coast power corridors like Manhattan is its dearth of old money. There are exceptions, but most of the fortunes in the Bay Area are not just new money but freshly minted new money from this current generation of tech. You have some old VC or semiconductor industry fortunes, but most of those people are still alive.

It's in NYC that you run into multi-generational old money hanging around on the Upper East or West sides of Manhattan, or encounter old wealth being showered around town by young socialites whose source of wealth is simply a fortuitous last name. Trickle down economics works, but often just down the veins of family trees.

It's not that the existence of old money or old social capital dooms a social network to inevitable stagnation, but a social network should continue to prioritize distribution for the best content, whatever the definition of quality, regardless of the vintage of user producing it. Otherwise a form of social capital inequality sets in, and in the virtual world, where exit costs are much lower than in the real world, new users can easily leave for a new network where their work is more properly rewarded and where status mobility is higher.

It may be that Silicon Valley never comes to be dominated by old money, and I'd consider that a net positive for the region. I'd rather the most productive new work be rewarded consistently by the marketplace than a bunch of stagnant quasi-monopolies hang on to wealth as they reach bloated scales that aren't conducive to innovation. The same applies to social networks and multi-player video games. As a newbie, how quickly, if you put in the work, are you "in the game"? Proof of work should define its own meritocracy.

The same way many social networks track keystone metrics like time to X followers, they should track the ROI on posts for new users. It's likely a leading metric that governs retention or churn. It’s useful as an investor, or even as a curious onlooker, to test a social network by posting varied content from test accounts to gauge the efficiency and fairness of the distribution algorithm.
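As a rough sketch of what tracking that ROI might look like, the Python below averages reactions per hour for posts made from test accounts, grouped by network. The record fields, the choice of reactions per hour as the rate, and the function names are all assumptions for illustration; a real team would pick whatever payback metric fits its product.

```python
from typing import Dict, List, NamedTuple


class PostResult(NamedTuple):
    network: str       # label for the network the test post went to
    reactions: int     # likes + comments + reshares observed so far
    hours_live: float  # how long the post had been up when measured


def reactions_per_hour(post: PostResult) -> float:
    """Crude payback rate for a single post."""
    return post.reactions / post.hours_live


def roi_by_network(posts: List[PostResult]) -> Dict[str, float]:
    """Average reactions per hour across all test posts on each network."""
    rates: Dict[str, List[float]] = {}
    for post in posts:
        rates.setdefault(post.network, []).append(reactions_per_hour(post))
    return {network: sum(values) / len(values) for network, values in rates.items()}
```

Feed it the same basket of content posted to several services and the resulting dictionary is a first approximation of the social capital interest rate on each.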

Whatever the mechanisms, social networks must devote a lot of resources to market making between content and the right audience for that content so that users feel sufficient return on their work. Distribution is king, even when, or especially when it allocates social capital.

Why copying proof of work is lousy strategy for status-driven networks

We often see a new social network copy a successful incumbent but with a minor twist thrown in. In the wake of Facebook’s recent issues, we may see some privacy-first social networks, but we have an endless supply of actual knockoffs to study. App.net and then Mastodon were two prominent Twitter clones that promised some differentiation but which built themselves on the same general open messaging framework.

Most of these near clones have failed or will fail. The reason that matching the basic proof of work hurdle of a Status as a Service incumbent fails is that it generally duplicates the status game that already exists. By definition, if the proof of work is the same, you're not really creating a new status ladder game, and so there isn't a real compelling reason to switch when the new network really has no one in it.

This isn't to say you can't copy an existing proof of work and succeed. After all, Facebook replaced social networks like MySpace and Friendster that came before it, and in the real world, new money sometimes becomes the new old money. You can build a better status game or create a more valuable form of status. Usually when such displacement occurs, though, it does so along the other dimension of pure utility.

For example, we have multiple messaging apps that became viable companies just by capturing a particular geographic market through localized network effects. We don't have one messaging app to rule them all in the world, but instead a bunch that have won in particular geographies. After all, the best messaging app in most countries or continents is the one most other people are already using there.

But in the same market? Copying a proof of work there is a tough road. The first mover advantage is also such that the leader with the dominant graph and the social capital of most value can look at any new features that fast followers launch and pull a reverse copy, grafting them into their more extensive and dominant incumbent graph.

In China, Tencent is desperate to cool off Bytedance's momentum in the short video space; Douyin is enemy number one. Tencent launched a clone but added a feature which allowed viewers to record a side-by-side video reaction in response to any video. It took about half a second for Bytedance to incorporate that into Douyin, and now it's a popular feature in TikTok the world over. If you can't change the proof of work competition as a challenger, copy and throttle is an effective strategy for the incumbent.

Not to mention that a wholesale ripoff of another app tends to be frowned upon as poor form. Even in China, with its reputation as the land of loose IP protection, users will tend to post dismissive reviews of blatant copycat apps in app stores. Chinese users may not be as aware of American apps that are knocked off in China, but within China, users don't just jump ship to out-and-out copycat apps. There has to be an incentive to overcome the switching costs, and that applies in China as it does elsewhere.

A few specifics of note here. I once wrote about social networks that the network's the thing; that is, the composition of the graph once a social network reaches scale is its most unique quality. I would update that today to say that it’s the unique combination of a feature and a specific graph that is any network’s most critical competitive advantage. Copying some network's feature often isn’t sufficient if you can’t also copy its graph, but if you can apply the feature to some unique graph that you earned some other way, it can be a defensible advantage.

Nothing illustrates this better than Facebook's attempts to win back the young from Snapchat by copying some of the network's ephemeral messaging features, or Facebook's attempt to copy TikTok with Lasso, or, well, Facebook's attempt to duplicate just about every social app with any traction anywhere. The problem with copying Snapchat is that, well, the reason young people left Facebook for Snapchat was in large part because their parents had invaded Facebook. You don't leave a party with your classmates to go back to one your parents are throwing just because your dad brings in a keg and offers to play beer pong.

The pairing of Facebook's gigantic graph with just about any proof of work from another app changes the very nature of that status game, sometimes in undesirable ways. Do you really want your coworkers and business colleagues and family and friends watching you lip synch to "It's Getting Hot in Here" by Nelly on Lasso? Facebook was rumored to be contemplating a special memes tab to try to woo back the young, which, again, completely misunderstands how the young play the meme status game. At last check that plan had been shelved.

Of course, the canonical Facebook feature grab that pundits often cite as having worked is Instagram's copy of Snapchat's Stories format. As I've written before, I think the Stories format is a genuine innovation on the social modesty problem of social networks. That is, all but the most egregious showoffs feel squeamish about publishing too much to their followers. Stories, by putting the onus on the viewer to pull that content, allows everyone to publish away guilt-free, without regard for the craft that regular posts demand in the ever escalating game that is life publishing. In a world where algorithmic feeds break up your sequence of posts, Stories also allow gifted creators to create sequential narratives.

Thus Stories is inherently about lowering the publishing hurdle for users and about a new method of storytelling, and any multi-sided network seeing declining growth will try grafting it on their own network at some point just to see if it solves supply-side social modesty.

Ironically, as services add more and more filters and capabilities into their story functionality, we see the proof of work game in Stories escalating. Many of the Instagram Stories today are more elaborate and time-consuming to publish than regular posts; the variety of filters and stickers and GIFs and other tools in the Stories composer dwarfs the limited filters available for regular Instagram posts. What began as a lighter weight posting format is now a more sophisticated and complex one.

You can take the monkey out of the status-seeking game, but you can't take the status-seeking out of the monkey.

The Greatest Social Capital Creation Event in Tech History

In the annals of tech, and perhaps the world, the event that created the greatest social capital boom in history was the launch of Facebook's News Feed.

Before News Feed, if you were on, say, MySpace, or even on Facebook before News Feed launched, you had to browse around to find all the activity in your network. Only a demographic of a particular age will recall having to click from one profile to another on MySpace while stalking one’s friends. It almost seems comical in hindsight, that we'd impose such a heavy UI burden on social media users. Can you imagine if, to see all the new photos posted in your Instagram network, you had to click through each profile one by one to see if they’d posted any new photos? I feel like my parents talking about how they had to walk miles to grade school through winter snow wearing moccasins of tree bark when I complain about the undue burden of social media browsing before the News Feed, but it truly was a monumental pain in the ass.

By merging all updates from all the accounts you followed into a single continuous surface and having that serve as the default screen, Facebook News Feed simultaneously increased the efficiency of distribution of new posts and pitted all such posts against each other in what was effectively a single giant attention arena, complete with live updating scoreboards on each post. It was as if the panopticon inverted itself overnight, as if a giant spotlight turned on and suddenly all of us performing on Facebook for approval realized we were all in the same auditorium, on one large, connected infinite stage, singing karaoke to the same audience at the same time.

It's difficult to overstate what a momentous sea change it was for hundreds of millions, and eventually billions, of humans who had grown up competing for status in small tribes, to suddenly be dropped into a talent show competing against EVERY PERSON THEY HAD EVER MET.

Predictably, everything exploded. The number of posts increased. The engagement with said posts increased. This is the scene in a movie in which, having launched something, a bunch of people stand in a large open war room waiting, and suddenly a geek staring at a computer goes wide-eyed, exclaiming, "Oh my god." And then the senior ranking officer in the room (probably played by a scowling Ed Harris or Kyle Chandler) walks over to look at the screen, where some visible counter is incrementing so rapidly that the absolute number of digits is incrementing in real time as you look at it, because films have to make a plot development like this brain dead obvious to the audience. And then the room erupts in cheers while different people hug and clap each other on the back, and one random extra sprints across the screen in the background, shaking a bottle of champagne that explodes and ejaculates a stream of frothy bubbly through the air like some capitalist money shot that inspires, later, a 2,000 word essay from Žižek.

Of course, users complained about News Feed at first, but their behavior belied their words, something that would come to haunt Facebook later when it took it as proof that users would always just cry wolf and that similar changes in the future would be the right move regardless of public objections.

Back in those more halcyon times, though, News Feed unleashed a gold rush for social capital accumulation. Wow, that post over there has ten times the likes that my latest does! Okay, what can I learn from it to use in my next post? Which of my content is driving the most likes? We talk about the miracles of machine learning in the modern age, but as social creatures, humans are no less remarkable in their ability to decipher and internalize what plays well to the peanut gallery.

Stories of teens A/B testing Instagram posts, yanking those which don't earn enough likes in the first hour, are almost beyond satire; a show like Black Mirror often just resorts to episodes that show things that have already happened in reality. The key component of the 10,000 hour rule of expertise is the idea of deliberate practice, the type that provides immediate feedback. Social media may not be literally real-time in its feedback, but it's close enough, and the scope of reach is orders of magnitude beyond that of any social performance arena in history. We have a generation now that has been trained through hundreds of thousands, perhaps millions of social media reps on what engages people on which platforms. In our own way, we are all Buzzfeed. We are all Kardashians.

The tighter the feedback loop, the quicker the adaptation. Compare early Twitter to modern Twitter; it's like going from listening to your coworkers at a karaoke bar to watching Beyonce play Coachella. I wrote once that any Twitter account that gained enough followers would end up sounding like a fortune cookie, but I underestimated how quickly everyone would arrive at that end state.

As people start following more and more accounts on a social network, they reach a point where the number of candidate stories exceeds their capacity to see them all. Even before that point, the sheer signal-to-noise ratio may decline to the point that it affects engagement. Almost any network that hits this inflection point turns to the same solution: an algorithmic feed.

Remember, status derives value from some type of scarcity. What is the one fundamental scarcity in the age of abundance? User attention. The launch of an algorithmic feed raises the stakes of the social media game. Even if someone follows you, they might no longer see every one of your posts. As DiCaprio said in Django Unchained, “You had my curiosity, but now, under the algorithmic feed, you have to earn my attention.”

As humans, we intuitively understand that some galling percentage of our happiness with our own status is relative. What matters is less our absolute status than how we are doing compared to those around us. By taking the scope of our status competitions virtual, we scaled them up in a way that we weren't entirely prepared for. Is it any surprise that seeing other people signaling so hard about how wonderful their lives are decreases our happiness?

As evidence of how anomalous a change this has been for humanity, witness how many celebrities continue to be caught with a history of offensive social media posts that should obviously have been taken down long ago given shifting sensibilities. Kevin Hart, baseball players like Josh Hader, Trea Turner, and Sean Newcomb, and a litany of other public figures and their management teams didn't think to go back and scrub some of their earlier social media posts despite nothing but downside optionality.

Could social networks have chosen to keep likes and other such metrics about posts private, visible only to the recipient? Could we have kept this social capital arms race from escalating? Some tech CEOs now look back and, like Alan Greenspan, bemoan the irrational exuberance that led us to where we are now, but let's be honest, the incentives to lower interest rates on social capital in all these networks, given their goals and those of their investors, were just too great. If one company hadn’t flooded the market with status, others would have filled the void many times over.

A social network like Path attempted to limit your social graph size to the Dunbar number, capping your social capital accumulation potential and capping the distribution of your posts. The exchange, they hoped, was some greater transparency, more genuine self-expression. The anti-Facebook. Unfortunately, as social capital theory might predict, Path did indeed succeed in becoming the anti-Facebook: a network without enough users. Some businesses work best at scale, and if you believe that people want to accumulate social capital as efficiently as possible, putting a bound on how much they can earn is a challenging business model, as dark as that may be.

Why Social Capital Accumulation Skews Young

I'd love to see a graph of social capital assets under management by user demographic. I'd wager that we'd see that young people, especially those from their teens, when kids seem to be given their first cell phones, through early 20's, are those who dominate the game. My nephew can post a photo of his elbow on Instagram and accumulate a couple hundred likes; I could share a photo of myself in a conga line with Barack Obama and Beyonce while Jennifer Lawrence sits on my shoulders pouring Cristal over my head and still only muster a fraction of the likes my nephew does posting a photo of his elbow. It's a young person's game, and the Livejournal/Blogger/Flickr/Friendster/MySpace era in which I came of age feels like the precambrian era of social in comparison.

While we're all status-seeking monkeys, young people tend to be the tip of the spear when it comes to catapulting new Status as a Service businesses, and may always be. A brief aside here on why this tends to hold.

One is that older people tend to have built up more stores of social capital. A job title, a spouse, maybe children, often a house or some piece of real estate, maybe a car, furniture that doesn't require you to assemble it on your own, a curriculum vitae, one or more college degrees, and so on.

[This differs by culture, of course. In the U.S., where I grew up, one’s job is the single most important status carrier which is why so many conversations there begin with “What do you do?”]

Young people are generally social capital poor unless they've lucked into a fat inheritance. They have no job title, they may not have finished college, they own few assets like homes and cars, and often if they've finished college they're saddled with substantial school debt. For them, the fastest and most efficient path to gaining social capital, while they wait to level up enough to win at more grown-up games like office politics, is to ply their trade on social media (or video games, but that’s a topic for another day).

Secondly, because of their previously accumulated social capital, adults tend to have more efficient means of accumulating even more status than playing around online. Maintenance of existing social capital stores is often a more efficient use of time than fighting to earn more on a new social network given the ease of just earning interest on your sizeable status reserves. That's just math, especially once you factor in loss aversion.

Young people look at so many of the status games of older folks—what brand of car is parked in your garage, what neighborhood can you afford to live in, how many levels below CEO are you in your org—and then look at apps like Vine and Musical.ly, and they choose the only real viable and thus optimal path before them. Remember the second tenet: people maximize their social capital the most efficient way possible. Both the young and old pursue optimal strategies.

That so much social capital for the young comes in the form of followers, likes, and comments from peers and strangers shouldn't lessen its value. Think back to your teen years and try to recall any real social capital that you could accumulate on such a scale. In your youth, the approval of peers and others in your demographic tends to matter more than just about anything, and social media has extended the reach of the youth status game in just about every direction possible.

Furthermore, old people tend to be hesitant about mastering new skills in general, including new status games, especially if they involve bewildering new technology. There are many reasons, including having to worry about raising children and other such adult responsibilities and just plain old decay in neural malleability. Perhaps old dogs don't learn new tricks because they are closer to death, and the period to earn a positive return on that investment is shorter. At some point, it's not worth learning any new tricks at all, and we all turn into the brusque old lady in every TV show, e.g. Maggie Smith in Downton Abbey, dropping withering quips about the follies of humanity all about us. I look forward to this period of my life when, through the unavoidable spectre of mortality, I will naturally settle into my DGAF phase of courageous truth-telling.

Lastly, young people have a surplus of something which most adults always complain they have too little of: time. The hurdle rate on the time of the young is low, and so they can afford to spend some of that surplus exploring new social networks, mining them to see if the social capital returns are attractive, whereas most adults can afford to wait until a network has runaway product-market fit to jump in. The young respond to all the status games of the world with a consistent refrain: "If you are looking for ransom I can tell you I don't have money, but what I do have are a very particular set of skills. Among those are the dexterity and coordination to lip synch to songs while dancing Blocboy JB's Shoot in my bedroom, and the time to do it over and over again until I nail it" (I wrote this long before recent events in which Liam Neeson lit much of his social capital on fire, vacating the “wronged and vengeful father with incredible combat and firearms skills” role to the next aging male star).

These modern forms of social capital are like new money. Not surprisingly, then, older folks, who are worse at accumulating these new badges than the young, often scoff at those kids wasting time on those apps, just as old money from the Upper West and Upper East Sides of New York look down their noses at those hoodie-wearing new money billionaire philistines of Silicon Valley.

The exception might be those who grew up in this first golden age of social media. For some of this generation’s younger NBA players, who were on Instagram from the time they got their first phone, posting may be second nature, a force of habit they bring with them into the league. Witness how many young NBA stars track their own appearances on House of Highlights the way stars of old looked for themselves on SportsCenter.

If this generational divide on social media between the old and the young was simply a one-time anomaly given the recent birth of social networks, and if future generations will be virtual status-seeking experts from womb to tomb, then capturing users in their formative social media years becomes even more critical for social networks.

“I contain multitudes” (said the youngblood)

Incidentally, teens and twenty-somethings, more so than the middle-aged and elderly, tend to juggle more identities. In middle and high school, kids have to maintain an identity among classmates at school, then another identity at home with family. Twenty-somethings craft one identity among coworkers during the day, then another among their friends outside of work. Often those spheres have differing status games, and there is some penalty to merging those identities. Anyone who has ever sent a text meant for their schoolmates to their parents, or emailed a boss or coworker something meant for their happy hour crew knows the treacherous nature of context collapse.

Add to that this younger generation's preference for and facility with visual communication and it's clear why the preferred social network of the young is Instagram and the preferred messenger Snapchat, both preferable to Facebook. Instagram because of the ease of creating multiple accounts to match one's portfolio of identities, Snapchat for its best in class ease of visual messaging privately to particular recipients. The expiration of content, whether explicitly executed on Instagram (you can easily kill off a meme account after you've outgrown it, for example), or automatically handled on a service like Snapchat, is a must-have feature for those for whom multiple identity management is a fact of life.

Facebook, with its explicit attachment to the real world graph and its enforcement of a single public identity, is just a poor structural fit for the more complex social capital requirements of the young.

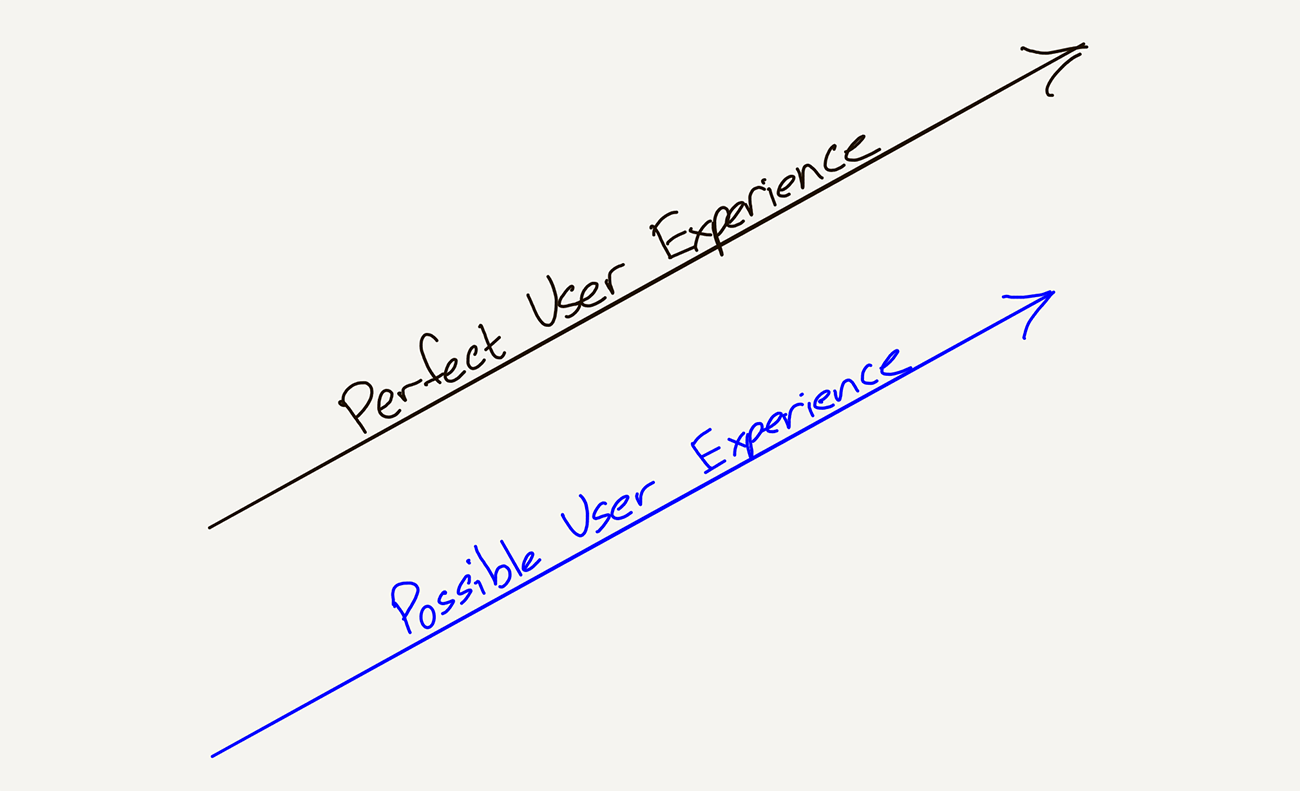

Common Social Network Arcs

It's useful to look at some of the common paths that social networks traverse over time using our two-axis model. Not all of them took the same paths to prominence. Doing so also helps illuminate the most productive strategies for each to pursue future growth.

First utility, then social capital

Come for the tool, stay for the network

This is the well-known “come for the tool, stay for the network” path. Instagram is a good example here given its growth from filter-driven utility to social photo sharing behemoth. Today, I can't remember the last time I used an Instagram filter.

In the end, I think most social networks, if they've made this journey, need to make a return to utility to be truly durable. Commerce is just one area where Instagram can add more utility for its users.

First social capital, then utility

Lots of the internet’s great resources were built off people seeking a hit of fame and recognition

Come for the fame, stay for the tool?

Foursquare was this for me. In the beginning, I checked in to try to win mayorships at random places. These days, Foursquare is trying to become more of a utility, with information on places around you, rather than just a quirky distributed social capital game. Heavier users may have thoughts on how successful that has been, but in just compiling a database of locations that other apps can build off of, they have built up a store of utility.

IMDb, Wikipedia, Reddit, and Quora are more prominent examples here. Users come for the status, and help to build a tool for the commons.

Utility, but no social capital

Plenty of huge social apps are almost entirely utilitarian, but it’s a brutally competitive quadrant

Some companies manage to create utility for a network but never succeed at building any real social capital of note (or don’t even bother to try).

Most messaging apps fall into this category. They help me to reach people I already know, but they don't introduce me to too many new people, and they aren't really status games with likes and follows. Skype, Zoom, FaceTime, Google Hangouts, Viber, and Marco Polo are examples of video chat apps that fit this category as well. While some messaging apps are trying to add features like Stories that start to veer into the more performative realm of traditional social media, I’m skeptical they’ll ever see traction doing so when compared to apps that are more pure Status as a Service apps like Instagram.

This bottom right quadrant is home to some businesses with over a billion users, but in minimizing social capital and competing purely on utility-derived network effects, this tends to be a brutally competitive battleground where even the slimmest moat is fought for with blood and sweat, especially in the digital world where useful features are trivial to copy.

Social capital, but little utility

When a social network loses heat before it has built utility, the fall can come as quickly as the rise

One could argue Foursquare actually lands here, but the most interesting company to debate in this quadrant is clearly Facebook. I'm not arguing that Facebook doesn't have utility, because clearly it does in some obvious ways. In some markets, it is the internet. Messenger is clearly a useful messaging utility for over a billion people.

However, the U.S. is a critical market for Facebook, especially when it comes to monetization, and so it's worth wondering how things might differ for Facebook today if it had succeeded in pushing further out on the utility axis. Many people I know have just dropped Facebook from their lives this past year with little impact on their day-to-day lives. Among the obvious and largest utility categories, like commerce or payments, Facebook isn't a top tier player in any except advertising.

This comparison is especially stark if we compare it to the social network to which it's most often contrasted.

Both social capital and utility simultaneously

The holy grail for social networks is to generate so much social capital and utility that it ends up in that desirable upper right quadrant of the 2x2 matrix. Most social networks will offer some mix of both, but none more so than WeChat.

While I hear of people abandoning Facebook and never looking back, I can't think of anyone in China who has just gone cold turkey on WeChat. It's testament to how much of an embedded utility WeChat has become that to delete it would be a massive inconvenience for most citizens.

Just look at the list of services in the WeChat or WePay or AliPay menu for the typical Chinese user and consider that Facebook isn’t a payment option for any of them.

Of course, the competitive context matters. Facebook faced much stiffer competition in these categories than WeChat did; for Facebook to build a better mousetrap in any of these, the requirements were much higher than for WeChat.

Take payments for example. The Chinese largely skipped credit cards, for a whole host of reasons. In part it was due to a cultural aversion to debt, in part because Visa, Mastercard, and American Express weren’t allowed into China where they would certainly have marketed their cards as aggressively as they always do. That meant Alipay and WePay launched competing primarily with cash and all its familiar inconveniences. Compare that to, say, Apple Pay trying to displace the habit of pulling out a credit card in the U.S., especially given how so many people are addicted to credit card points and miles (airline frequent flier programs being another testament to the power of status to influence people’s decision-making).

Making a real dent in new categories like commerce and payments will require a long-term mindset and a ton of resources on the part of Facebook and its subsidiaries like WhatsApp and Instagram. Past efforts to, for example, improve Facebook search, position Facebook as a payment option, and introduce virtual assistants on Messenger seem to have been abandoned. Will new efforts like Facebook's cryptocurrency effort or Instagram's push into commerce be given a sufficiently long leash?

Social Network Asymptote 1: Proof of Work

How do you tell when a Status as a Service business will stop growing? What causes networks to suddenly hit that dreaded upper shoulder in the S-curve if, according to Metcalfe's Law, the value of a network grows in proportion to the square of its users? What are the missing variables that explain why networks don’t keep growing until they’ve captured everyone?
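To make the puzzle concrete, here is a minimal sketch of Metcalfe's Law as stated above. The function name and the constant `k` are illustrative assumptions, not anything a real network measures.

```python
def metcalfe_value(users: int, k: float = 1.0) -> float:
    """Network value under Metcalfe's Law: proportional to users squared.

    k is a hypothetical proportionality constant; only ratios matter here.
    """
    return k * users ** 2


# Doubling the user base quadruples the modeled value.
growth = metcalfe_value(2_000_000) / metcalfe_value(1_000_000)
```

If this were the whole story, every added user would make the next one more valuable to acquire, and growth would never flatten. The S-curve implies a term is missing from the model.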

The reasons are numerous; let’s focus on social capital theory. To return to our cryptocurrency analogy, the choice of your proof of work is by definition an asymptote because the skills it selects for are not evenly distributed.

To take a specific example, since it's the app du jour, let's look at the app formerly known as Musical.ly, TikTok.

You've probably watched a TikTok video, but have you tried to make one? My guess is that many of you have not and never will (but if you have, please send me a link). This is no judgment, I haven’t either.

You may possess, in your estimation, too much self-dignity to wallow in cringe. Your arthritic joints may not be capable of executing Orange Justice. Whatever the reason, TikTok's creator community is ultimately capped by the nature of its proof of work, no matter how ingenious its creative tools. The same is true of Twitter: the number of people who enjoy crafting witty 140- and now 280-character info nuggets is finite. Every network has some ceiling on its ultimate number of contributors, and it is often a direct function of its proof of work.

Of course, the value and total user size of a network are not just a direct function of its contributor count. Whether you believe in the 1/9/90 rule of social networks or not, it’s directionally true that any network has value to people besides its creators. In fact, for almost every network, the number of lurkers far exceeds the number of active participants. Life may not be a spectator sport, but a lot of social media is.
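As a back-of-the-envelope sketch, the 1/9/90 rule can be read as a fixed split of a user base into 1% creators, 9% occasional contributors, and 90% lurkers. The function names below are hypothetical, and the exact percentages are a rule of thumb rather than measured data.

```python
def split_1_9_90(total_users: int) -> dict:
    """Split a user base into creators, contributors, and lurkers (1/9/90)."""
    creators = total_users // 100
    contributors = total_users * 9 // 100
    lurkers = total_users - creators - contributors
    return {"creators": creators, "contributors": contributors, "lurkers": lurkers}


def implied_audience(creator_cap: int) -> int:
    """Total users implied if creators are capped and make up 1% of the base."""
    return creator_cap * 100


breakdown = split_1_9_90(1_000_000)
ceiling = implied_audience(50_000)
```

Read this way, a cap on creators does not cap the network at that number, but it does scale the audience that can form around them.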

This isn’t to say that proof of work is bad. In fact, coming up with a constraint that unlocks the creativity of so many people is exactly how Status as a Service businesses achieve product-market fit. Constraints force the type of compression that often begets artistic elegance, and forcing creatives to grapple with a constraint can foster the type of focused exertion that totally unconstrained exploration fails to inspire.

Still, a ceiling is a ceiling. If you want to know the terminal value of a network, the type of proof of work is a key variable to consider. If you want to know why Musical.ly stopped growing and sold to Bytedance, why Douyin will hit a ceiling of users in China (if it hasn’t already), or what the cap of active users is for any social network, first ask yourself how many people have the skill and interest to compete in that arena.

Social Network Asymptote 2: Social Capital Inflation and Devaluation

More terrifying to investors and employees than an asymptote is collapse. Recall the cautionary myth of the fall of Myspace, named after the little known Greek god of vanity Myspakos (Editor’s note: I made that up, it’s actually Narcissus). Why do some social networks, given Metcalfe's Law and its related network effects theories, not only stop growing but even worse, contract and wither away?

To understand the inherent fragility in Status as a Service businesses, we need to understand the volatility of status.

Social Capital Interest Rate Hikes

One of the common traps is the winner's curse for social media. If a social network achieves enough success, it grows to a size that requires the imposition of an algorithmic feed in order to maintain high signal-to-noise for most of its users. It's akin to the Fed trying to manage inflation by raising interest rates.

The problem, of course, is that this now diminishes the distribution of any single post from any single user. One of the most controversial of such decisions was Facebook's change to dampen how much content from Pages would be distributed into the News Feed.

Many institutions, especially news outlets, had turned to Facebook to access some sweet sweet eyeball inventory in News Feeds. They devised all sorts of giveaways and promotions to entice people to follow their Facebook Pages. After gaining followers, a media company had a free license to publish and publish often into their News Feeds, an attractive proposition considering users were opening Facebook multiple times per day. For media companies, who were already struggling to grapple with all the chaos the internet had unleashed on their business models, this felt like upgrading from waving stories at passersby on the street to stapling stories to the inside of eyelids the world over, several times a day. Deterministic, guaranteed eyeballs.

Then, one day, Facebook snapped its fingers like Thanos and much of that dependable reach evaporated into ash. No longer would every one of your Page followers see every one of your posts. Facebook did what central banks do to combat inflation and raised interest rates on borrowing attention from the News Feed.

Was such a move inevitable? Not necessarily, but it was always likely. That’s because there is one scarce resource which is a natural limit on every social network and media company today, and that is user attention. That a social network shares some of that attention with its partners will always be secondary to accumulating and retaining that attention in the first place. Facebook, for example, must always guard against the tragedy of the commons when it comes to News Feed. Saving media institutions is a secondary consideration, if that.
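To make the attention arithmetic concrete, here is a toy sketch. It is not Facebook's ranking system; the function name, the idea of a fixed number of feed "slots" per day, and the numbers in the usage note are all assumptions for illustration. It only shows that when the supply of posts outgrows a fixed attention budget, the share of posts any one user can see has to fall.

```python
def reach_per_post(feed_slots_per_day: int, posts_competing: int) -> float:
    """Toy model (hypothetical): fraction of candidate posts a user can see.

    feed_slots_per_day: how many posts one user actually reads in a day
                        (the scarce attention budget; an assumed constant).
    posts_competing:    how many posts from friends and Pages are eligible
                        for that user's feed on the same day.
    """
    if feed_slots_per_day < 0 or posts_competing <= 0:
        raise ValueError("slots must be >= 0 and posts must be > 0")
    # Reach is capped at 100%: with few posts, everything gets seen.
    return min(1.0, feed_slots_per_day / posts_competing)
```

With 200 slots and 100 eligible posts, every post is seen. With the same 200 slots and 2,000 eligible posts, only a tenth can be, no matter how the feed chooses among them.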

Social Capital Deflation: Scarcity Precarity or the Groucho Marx Conundrum

Another existential risk that is somewhat unique to social networks is this: network effects are powerful, but ones which are social in nature have the unfortunate quality of being just as ferocious in reverse.

In High Growth Handbook by Elad Gil, Marc Andreessen notes:

I think network effects are great, but in a sense they’re a little overrated. The problem with network effects is they unwind just as fast. And so they’re great while they last, but when they reverse, they reverse viciously. Go ask the MySpace guys how their network effect is going. Network effects can create a very strong position, for obvious reasons. But in another sense, it’s a very weak position to be in. Because if it cracks, you just unravel. I always worry when a company thinks the answer is just network effects. How durable are they?

Why do social network effects reverse? Utility, the other axis by which I judge social networks, tends to be uncapped in value. It's rare to describe a product or service as having become too useful. That is, it's hard to over-serve on utility. The more people that accept a form of payment, the more useful it is, like Visa or Mastercard or Alipay. People don’t stop using a service because it’s too useful.

Social network effects are different. If you've lived in New York City, you've likely seen, over and over, night clubs which are so hot for months suddenly go out of business just a short while later. Many types of social capital have qualities which render them fragile. Status relies on coordinated consensus to define the scarcity that determines its value. Consensus can shift in an instant. Recall the friend in Swingers, who, at every crowded LA party, quips, "This place is dead anyway." Or recall the wise words of noted sociologist Groucho Marx: "I don't care to belong to any club that will have me as a member."

The Groucho Marx effect doesn't take effect immediately. In the beginning, a status hierarchy requires lower status people to join so that the higher status people have a sense of just how far above the masses they reside. It's silly to order bottle service at Hakkasan in Las Vegas if no one is sitting on the opposite side of the velvet ropes; a leaderboard with just a single high score is meaningless.

However, there is some tipping point of popularity beyond which a restaurant, club, or social network can lose its cool. When Malcolm Gladwell inserted the term "tipping point" into popular vernacular, he didn't specify which way things were tipping. We tend to glamorize the tipping into rapid diffusion, the toe of the S-curve, but in status games like fashion the arc of popularity traces not an S-curve but a bell curve. At the top of that bell curve, you reach the less glamorous tipping point, the one before the plummet.

When the definition of status is distributed, often one minority has disproportionate sway. If that group, the cool kids, pulls the ripcord, everyone tends to follow them to the exits. In fact, it’s usually the most high status or desirable people who leave first, the evaporative cooling effect of social networks. At that point, that product or service better have moved as far out as possible on the utility axis or the velocity of churn can cause a nose bleed.

[Mimetic desire is a cruel mistress. Girard would've had a field day with the Fyre Festival. Congratulations Billy McFarland, you are the ritual sacrifice with which we cleanse ourselves of the sin of coveting thy influencer’s bounty.]

Fashion is one of the most interesting industries for having understood this recurring boom and bust pattern in network effects and taken ownership of its own status devaluation cycles. Some strange cabal of magazine editors and fashion designers decide each season to declare arbitrarily new styles the fashion of the moment, retiring previous recommendations before they grow stale. There is usually no real utility change at all; functionally, the shirt you buy this season doesn’t do anything the shirt you bought last season still can’t do equally well. The industry as a whole is simply pulling the frontier of scarcity forward like a wave we're all trying to surf.

This season, the color of the moment might be saffron. Why? Because someone cooler than me said so. Tech tends to prioritize growth at all costs given the non-rival, zero marginal cost qualities of digital information. In a world of abundance, that makes sense. However, technology still has much to learn from industries like fashion about how to proactively manage scarcity, which is important when goods are rivalrous. Since many types of status are relative, they are, by definition, rivalrous. There is some equivalent of crop rotation theory which applies to social networks, but it's not part of the standard tech playbook yet.

A variant of this type of status devaluation cascade can be triggered when a particular group joins up. This is because the stability of a status lattice depends just as much on the composition of the network as its total size. A canonical example in tech was the youth migration out of Facebook when their parents signed on in force. Because of the incredible efficiency of News Feed distribution, Facebook became a de facto surveillance apparatus for the young: Mommy and Daddy are watching, as well as future universities and employers and dates who will time travel back and scour your profile someday. As Facebook became less attractive as a platform for the young, many of them flocked to Snapchat as their new messaging solution, its ephemeral nature offering built-in security and its UX opacity acting as a gate against clueless seniors.

I've written before about Snapchat's famously opaque Easter Egg UI as a sort of tamper-proof lid for parents, but if we combine social network utility theory with my post on selfies as a second language, it's also clear that Snapchat is a suboptimal messaging platform for older people whose preferred medium of communication remains text. Snapchat opens to a camera. If you want to text someone, it's extra work to swipe to the left pane to reach the text messaging screen.

I would be shocked if Facebook did not, at one point, contemplate a version of its app that opened to the camera first, instead of the News Feed, considering how many odd clones of other apps it’s considered in the past. If so, it’s good they never shipped it, because for young people, publishing to a graph that still contained their parents would've still been prohibitive, while for old folks who aren't as biased towards visual mediums, such a UI would've been suboptimal. It would've been a disastrous lose-lose for Facebook.

Patrick Collison linked to an interesting paper (PDF) on network effects traps in the physical world. They exist in the virtual world as well, and Status as a Service businesses are particularly fraught with them. Another instance is path dependent user composition. A fervent early adopter group can define who a new social network seems to be for, merely by flooding the service with content they love. Before concerted efforts to personalize the front page more quickly, Pinterest seemed like a service targeted mostly towards women even though its basic toolset is useful to many men as well. Because a new user’s front page usually drew upon pins from their friends already on the service, the earliest cohorts, which leaned female, dominated most new users’ feeds. My earliest Pinterest homepage was an endless collage of makeup, women’s clothing, and home decor because those happened to be some of the things my friends were pinning for a variety of projects.

Groucho Marx was ahead of his time as a social capital philosopher, but we can build upon his work. To his famous aphorism we should add some variants. When it comes to evaporative cooling, two come to mind: “I don’t want to belong to any club that will have those people as a member” and “I don’t want to belong to any club that those people don’t want to be a member of.”

Mitigating Social Capital Devaluation Risk, and the Snapchat Strategy

In a leaked memo late last year, Evan Spiegel wrote about how one of the core values of Snapchat is to make it the fastest way to communicate.

The most durable way for us to grow is by relentlessly focusing on being the fastest way to communicate.

Recently I had the opportunity to use Snapchat v5.0 on an iPhone 4. It had much of Bobby's original code and many of my original graphics. It was way faster than the current version of Snapchat running on my iPhone X.

In our excitement to innovate and bring many new products into the world, we have lost the core of what made Snapchat the fastest way to communicate.

In 2019, we will refocus our company on making Snapchat the fastest way to communicate so that we can unlock the core value of our service for the billions of people who have not yet learned how to use Snapchat. If we aren't able to unlock the core value of Snapchat, we won't ever be able to unlock the full power of our camera.

This will require us to change the way that we work and put our core product value of being the fastest way to communicate at the forefront of everything we do at Snap. It might require us to change our products for different markets where some of our value-add features detract from our core product value.

This clarifies Snapchat's strategy on the 3 axes of my social media framework: Snapchat intends to push out further on the utility axis at the expense of the social capital axis which, as we’ve noted before, is volatile ground to build a long-term business on.

Many will say, especially Snapchat itself, that it has been the anti-Facebook all along. Because it has no likes, it liberates people from destructive status games. To believe that is to underestimate the ingenuity of humanity in its ability to weaponize any network for status games.

Anyone who has studied kids using Snapchat knows that it's just as integral a part of high school status and FOMO wars as Facebook, and arguably more so now that those kids largely don’t use Facebook. The only other social media app that is as sharp a stick is Instagram which has, it’s true, more overt social capital accumulation mechanisms. Still, the idea that kids use Snapchat like some pure messaging utility is laughable and makes me wonder if people have forgotten what teenage school life was like. Whether you see people attend a party that you’re not invited to on Instagram or on someone’s Snap, you still feel terrible.

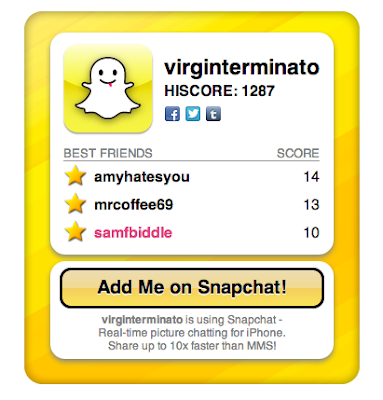

Remember Snapchat's original Best Friends list? I'm going to guess many of my readers don't, because, as noted earlier, old people probably didn't play that status game, if they'd even figured out how to use Snapchat by that point. This was just about as pure a status game feature as could be engineered for teens. Not only did it show the top three people you Snapped with most frequently, you could look at who the top three best friends were for any of your contacts. Essentially, it made the hierarchy of everyone's “friendships” public, making the popularity scoreboard explicit.

I’m glad this didn’t exist when I was in high school, I really didn’t need metrics on how much of a loser I was

You don’t want to know what the proof of work is to achieve Super BFF-dom

As with aggregate follower counts and likes, the Best Friends list was a mechanism for people to accumulate a very specific form of social capital. From a platform perspective, however, there's a big problem with this feature: each user could only have one best friend. It put an artificial ceiling on the amount of social capital one could compete for and accumulate.
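For the mechanically minded, a minimal sketch of how a list like this could be computed, assuming nothing about Snapchat's real implementation: the function name, the input format, and the fixed slot count are all hypothetical. It ranks contacts by how often you Snapped them and keeps only the top few, which is exactly what makes the game zero-sum: a new name can only get in by pushing another one out.

```python
from collections import Counter
from typing import Iterable, List


def best_friends(snap_recipients: Iterable[str], slots: int = 3) -> List[str]:
    """Hypothetical sketch: the contacts a user Snapped most often.

    snap_recipients: one entry per Snap sent, naming who received it.
    slots:           how many names the public list can hold (assumed fixed).
    """
    counts = Counter(snap_recipients)
    # most_common returns (name, count) pairs, highest count first.
    return [name for name, _count in counts.most_common(slots)]
```

Given a log with five Snaps to one friend, four to a second, three to a third, and two to a fourth, the fourth friend never appears on the list, however loyal they have been.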

In a clever move to unbound social capital accumulation and to turn a zero-sum game into a positive sum game, broadening the number of users working hard or engaging, Snapchat deprecated the very popular Best Friends list and replaced it with streaks.

If you’ve never seen those numbers and emojis on the right of your Snapchat contacts list, no one loves you. Just kidding, it just means you’re old.

If you and a friend Snap back and forth for consecutive days, you build up a streak which is tracked in your friends list. Young people quickly threw their hearts and souls into building and maintaining streaks with their friends. This was literally proof of work as proof of friendship, quantified and tracked.
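The streak rule is simple enough to sketch in a few lines. This is an illustration under stated assumptions, not Snapchat's actual logic: I assume a streak counts whole calendar days on which both friends Snapped each other, and the function and parameter names are made up.

```python
from datetime import date, timedelta
from typing import Set


def current_streak(mutual_snap_days: Set[date], today: date) -> int:
    """Hypothetical sketch: consecutive days, ending today, with Snaps both ways.

    mutual_snap_days: days on which both friends sent each other a Snap.
    today:            the day the streak is being checked.
    """
    streak = 0
    day = today
    # Walk backwards one day at a time until the chain is broken.
    while day in mutual_snap_days:
        streak += 1
        day -= timedelta(days=1)
    return streak
```

Miss a single day and the count drops to zero, which is the whole point: the number only has value because it is costly to maintain. And unlike a best friends list, there is no cap on how many friends you can run a streak with at once.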